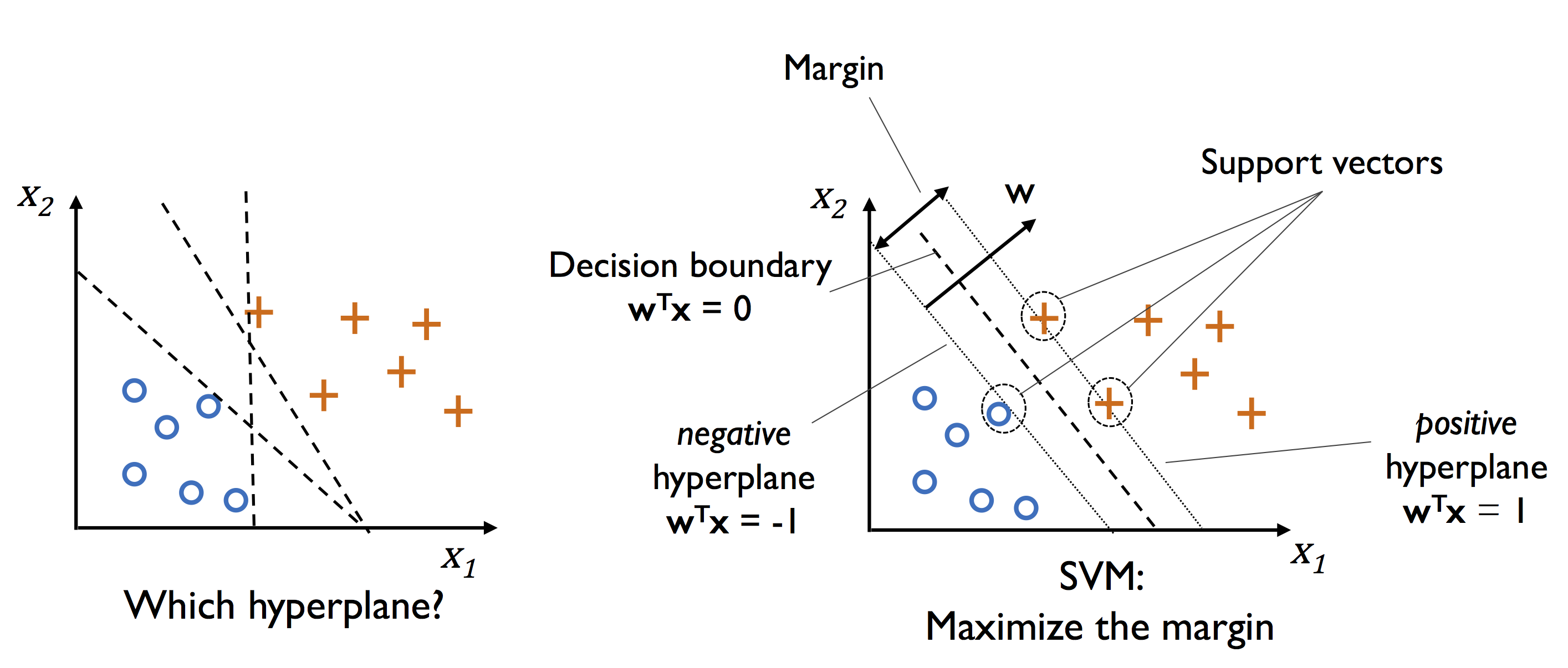

class: center, middle, inverse, title-slide # Introduction to Support Vector Machines & Random Forest ## Supervised Learning ### Cédric Hassen-Khodja, Volker Baeker, Jean Bernard Fiche, Francesco Pedaci ### CNRS BioCampus MRI ### 2020/10/26 (updated: 2021-05-24) --- # Summary 1. History of SVM 2. Types Of Machine Learning 3. Why support vector machine ? 4. What is support vector machine ? 5. How does it work ? a. Hard Margin b. Soft Margin c. Kernel trick 6. Evaluating model performance 7. Improving model performance 8. SVM in practice - Implementing biological application with Python a. Use Case - Problem Statement b. Use Case - Translocation Activity --- #1. History Of SVM 1. *1963*: Linear classifier - <u>Maximal Margin</u> Classifier by Vapnik and Chervonenkis. -- 1. *1992*: Nonlinear classification – <u>Kernel</u> trick by Bernhard E. Boser. -- 1. *1995*: The <u>Soft Margin</u> Classifier by Corinna Cortes and Vapnik. --- #2. Types of Machine Learning <div id="htmlwidget-e81f6715c7f87f6e37df" style="width:100%;height:504px;" class="DiagrammeR html-widget"></div> <script type="application/json" data-for="htmlwidget-e81f6715c7f87f6e37df">{"x":{"diagram":"\n graph TD\n A{<b>Machine Learning<\/b>} --> B{<b>Supervised Learning<\/b>}\n A --> C(<b>Unsupervised Learning<\/b>)\n A --> D(<b>Reinforcement Learning<\/b>)\n B --> E[<b>Classification<\/b>]\n B --> F[<b>Regression<\/b>]\n E --> G(<b>SVM<\/b>)\n F --> G\n style A fill:#E5E25F\n style B fill:#E5E25F\n style C fill:#87AB51\n style D fill:#87AB51\n style G fill:#87AB51\n style E fill:#B6E6E6\n style F fill:#B6E6E6\n"},"evals":[],"jsHooks":[]}</script> --- #3. Why support vector machine ? * It works really well with clear margin of separation. * It is effective in high dimensional spaces. * Robust against the outliers (controlled with the parameter C). --- #4. What is support vector machine ? Support vector machines (SVMs) aim to find a decision **hyperplane** that separates data points of different classes with a **maximal margin**.  <!-- # What is support vector machine ? --> <!-- ### a. Mathematics behind Maximum margin --> <!-- `$$w_0 + w^Tx_{pos} = 1$$` --> <!-- `$$w_0 + w^Tx_{neg} = -1$$` --> <!-- `$$=> w^T(x_{pos} - x_{neg}) = 2$$` --> <!-- `$$||w|| = \sqrt{\sum_{j-1}^{m}{w_j^2}}$$` --> <!-- `$$\frac{w^T(x_{pos} - x_{neg})}{||w||} = \frac{2}{||w||}$$` --> <!-- `$$min\frac{1}{2}||w||^2\\S.t. y_i(w_0 + w^Tx_i) \ge 1\ ∀_i$$` --> --- #5. How does it work ? We are given a set of people with different: Height | Weight | Sex ------ | ------ | ---- 145 | 55 | Woman 155 | 50 | Woman 160 | 52 | Woman 158 | 68 | Woman 174 | 74 | Man 170 | 86 | Man 180 | 62 | Man 185 | 78 | Man --- #5. How does it work ? <img src="intro_files/figure-html/svm1-1.svg" width="70%" /> --- #5. How does it work ? ## a. Hard Margin To separate the two classes we should split the data in the best possible way. <img src="intro_files/figure-html/svm2-1.svg" width="50%" /><img src="intro_files/figure-html/svm2-2.svg" width="50%" /> --- #5. How does it work ? ## a. Hard Margin <img src="intro_files/figure-html/svm3-1.svg" width="70%" /> --- #5. How does it work ? ## b. Soft Margin <img src="./figures/soft-margin.png" width="50%" /> --- #5. How does it work ? ## b. Soft Margin Height | Weight | Sex ------ | ------ | ---- 150 | 52 | Man 145 | 55 | Woman 155 | 50 | Woman 160 | 52 | Woman 158 | 68 | Woman 174 | 74 | Man 170 | 86 | Man 180 | 62 | Man 185 | 78 | Man 182 | 70 | Woman --- #5. How does it work ? ## b. Soft Margin <img src="intro_files/figure-html/svm5-1.svg" width="70%" /> --- #5. How does it work ? ## b. Soft Margin <img src="./figures/ksvm_output.png" width="50%" /><img src="intro_files/figure-html/svm6-2.svg" width="50%" /> --- #5. How does it work ? ## b. Soft Margin <img src="intro_files/figure-html/svm7-1.svg" width="50%" /><img src="intro_files/figure-html/svm7-2.svg" width="50%" /> --- #5. How does it work ? ## b. Soft Margin <img src="intro_files/figure-html/svm8-1.svg" width="50%" /><img src="intro_files/figure-html/svm8-2.svg" width="50%" /> --- #5. How does it work ? ## c. Kernel trick <img src="./figures/kernel-1.png" width="65%" /> --- #5. How does it work ? ## c. Kernel trick <img src="./figures/kernel-2.png" width="80%" /> --- #6. Evaluating model performance Height | Weight | Sex ------ | ------ | ---- 174 | 76 | Woman 184 | 77 | Man 194 | 50 | Man 159 | 90 | Woman 157 | 58 | Woman --- #6. Evaluating model performance <img src="./figures/eval.png" width="50%" /><img src="intro_files/figure-html/svm12-2.svg" width="50%" /> --- #7. Improving model performance * Changing the SVM kernel function * Changing the SVM cost parameter --- #8. SVM in practice - Implementing biological application with Python ## Use Case - Problem Statement Classify the cells based on the features. This image set is of a Transfluor assay where an orphan GPCR is stably integrated into the b-arrestin GFP expressing U2OS cell line. Channel 1 = FKHR-GFP; Channel 2 = DNA <img src="./figures/svm_workflow.png" width="73%" /> --- #8. SVM in practice - Implementing biological application with Python ## Use Case - Translocation Activity <img src="./figures/step_by_step.png" width="90%" /> --- #8. SVM in practice - Implementing biological application with Python ## Use Case - Translocation Activity <img src="./figures/step_by_step_2.png" width="100%" /> --- # Resources 1- Cortes, C., Vapnik, V. Support-vector networks. Mach Learn 20, 273–297 (1995). https://doi.org/10.1007/BF00994018 2- Bennett, K. and C. Campbell. “Support vector machines: hype or hallelujah?” SIGKDD Explor. 2 (2000): 1-13. 3- Steinwart, I. and Christmann, A. (2008) Support Vector Machines. Springer-Verlag, New York. 4- Vapnik, Vladimir. (2000). The Nature of Statistical Learning Theory. 10.1007/978-1-4757-3264-1_1. 5- Burges, C.. “A Tutorial on Support Vector Machines for Pattern Recognition.” Data Mining and Knowledge Discovery 2 (2004): 121-167. --- # Summary 1. History of Random Forest 2. Types Of Machine Learning 3. Why Random Forest ? 4. What is Random Forest ? 5. Decision Tree 6. How Does a decision tree work ? 7. How Does a Random Forest work ? 8. Random Forest in practice - Implementing biological application with CellProfiler Analyst a. Use Case - Problem Statement --- #1. History Of Random Forest 1. *1997*: In an important paper on written character recognition, Amit and Geman define a large number of geometric features and search over a random selection of these for the best split at each node. -- 1. *1998*: Ho has written a number of papers on "the random subspace" method which does a random selection of a subset of features to use to grow each tree. -- 1. *2001*: The introduction of random forests proper was rst made in a paper by Leo Breiman. This paper describes a method of building a forest of uncorrelated trees using a CART like procedure, combined with randomized node optimization and bagging. --- #2. Types of Machine Learning <div id="htmlwidget-18fda988e6324b0b40d9" style="width:100%;height:504px;" class="DiagrammeR html-widget"></div> <script type="application/json" data-for="htmlwidget-18fda988e6324b0b40d9">{"x":{"diagram":"\n graph TD\n A{<b>Machine Learning<\/b>} --> B{<b>Supervised Learning<\/b>}\n A --> C(<b>Unsupervised Learning<\/b>)\n A --> D(<b>Reinforcement Learning<\/b>)\n B --> E[<b>Classification<\/b>]\n B --> F[<b>Regression<\/b>]\n E --> G(<b>Random Forest<\/b>)\n F --> G\n style A fill:#E5E25F\n style B fill:#E5E25F\n style C fill:#87AB51\n style D fill:#87AB51\n style G fill:#87AB51\n style E fill:#B6E6E6\n style F fill:#B6E6E6\n"},"evals":[],"jsHooks":[]}</script> --- #3. Why Random Forest ? <b>No Overfitting</b>: * Number of trees increase * Training time is less <b>High Accuracy</b>: * Run efficiently on large database <b>Missing data</b>: * Accuracy when large proportion of data is missing --- #4. What is Random Forest ? <p> Random Forest creates multiple Decision Trees during training phase. The Decision of the majority of the trees is chosen by the random forest as the final decision. </p> <img src="./figures/what_RF.png" width="100%" /> --- #5. Decision Tree <p>Decision Tree is a tree shaped diagram. Each branch of the tree is an action and each node as a result of the decision taken.</p> <img src="./figures/DT_1.png" width="45%" /> --- #5. Decision Tree <img src="./figures/DT_2.png" width="100%" /> --- #5. Decision Tree <img src="./figures/DT_3.png" width="100%" /> --- #5. Decision Tree <img src="./figures/DT_4.png" width="100%" /> --- #5. Decision Tree ## Calculate entropy `$$Entropy = -\sum P(X)log_2P(X)$$` where p(x) is a fraction of a given class `$$P_L = \frac{3}6 = 0.5$$` `$$P_A = \frac{3}6 = 0.5$$` `$$E_1 = - \sum P_Llog_2(P_L) + P_Alog_2(P_A)$$` --- #5. Decision Tree ## Calculate entropy `$$P_L = \frac{1}3 = 0.334$$` `$$P_A = \frac{2}3 = 0.667$$` `$$E_L = - (0.334 log_2(0.334) + 0.667 log_2(0.667)) = -(-0.52+ (-0.38)) = 0.9$$` `$$E_R = - (0.334 log_2(0.334) + 0.667 log_2(0.667)) = -(-0.52+ (-0.38)) = 0.9$$` `$$E_2 = \frac{C_{ic}}{C_{ip}} * E_L + \frac{C_{ic}}{C_{ip}} * E_R$$` `$$E_2 = \frac{3}6 * 0.9 + \frac{3}6 * 0.9 = 0.9$$` --- #5. Decision Tree <img src="./figures/DT_5.png" width="100%" /> --- #5. Decision Tree <img src="./figures/DT_6.png" width="100%" /> --- #5. Decision Tree ## Information Gain `$$IG = E_1 - E_2 = 1-0.9 = 0.10$$` --- #5. Decision Tree <img src="./figures/DT_26.png" width="100%" /> --- #5. Decision Tree <img src="./figures/DT_7.png" width="100%" /> --- #6. How Does a decision tree work ? <img src="./figures/DT_10.png" width="100%" /> --- #6. How Does a decision tree work ? <img src="./figures/DT_11.png" width="100%" /> --- #6. How Does a decision tree work ? <img src="./figures/DT_12.png" width="100%" /> --- #6. How Does a decision tree work ? <img src="./figures/DT_14.png" width="100%" /> --- #6. How Does a decision tree work ? <img src="./figures/DT_15.png" width="100%" /> --- #6. How Does a decision tree work ? <img src="./figures/DT_16.png" width="100%" /> --- #7. How Does a random forest work ? <img src="./figures/DT_20.png" width="100%" /> --- #7. How Does a random forest work ? <img src="./figures/DT_21.png" width="100%" /> --- #7. How Does a random forest work ? <img src="./figures/DT_24.png" width="100%" /> --- #7. How Does a random forest work ? <img src="./figures/DT_25.png" width="100%" /> --- ##8. RF in practice - Implementing biological application with CellProfiler Analyst ### Use Case - Problem Statement Estimate the lowest dose necessary to induce the cytoplasm to nucleus translocation of the transcription factor NFkB in A549 (human alveolar basal epithelial) cells in response to `\(TNF\alpha\)` concentration. Channel 1 = FITC; Channel 2 = DAPI. <img src="./figures/rf_workflow.png" width="75%" /> --- # Resources 1- Mingers, J. An empirical comparison of selection measures for decision-tree induction. Mach Learn 3, 319–342 (1989). https://doi.org/10.1007/BF00116837 2- F. Esposito, D. Malerba, G. Semeraro and J. Kay, "A comparative analysis of methods for pruning decision trees," in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 19, no. 5, pp. 476-491, May 1997, doi: 10.1109/34.589207. 3- Robert E. Schapire and Yoav Freund. 2012. Boosting: Foundations and Algorithms. The MIT Press. 4- Breiman, L. Bagging predictors. Mach Learn 24, 123–140 (1996). https://doi.org/10.1007/BF00058655. 5- Breiman, L. Random Forests. Machine Learning 45, 5–32 (2001). https://doi.org/10.1023/A:1010933404324.